Somewhere right now, software is making decisions about your life. Not recommendations for what to watch or buy, but consequential predictions about whether you’re a security threat, a credit risk, or someone worth investigating.

Algorithms are forecasting the next crime in your neighborhood, the next terrorist attack at a border crossing, or which hospital beds will be needed during tomorrow’s emergency. And increasingly, one company sits at the center of this predictive machinery: Palantir Technologies.

You’ve probably never used Palantir’s software directly. You can’t download it from an app store or sign up for a free trial. Yet this secretive company, founded in Silicon Valley and co-founded by contrarian billionaire Peter Thiel, has quietly embedded itself into the decision-making infrastructure of governments, militaries, corporations, and institutions worldwide.

Palantir doesn’t just analyze what happened yesterday. It predicts what happens next, then helps its clients act on those predictions before events unfold. In essence, it’s writing futures that haven’t been lived yet.

This is the story of how one company learned to see around corners, and what it means when the future becomes something that can be calculated, optimized, and controlled.

The Vision: Peter Thiel’s Bold Experiment in Connected Intelligence

The idea behind Palantir emerged from a haunting question: What if we could have prevented 9/11? Intelligence agencies possessed fragments of information that, if connected, might have revealed the plot. But the data sat in disconnected systems, trapped in bureaucratic silos, invisible to the analysts who needed it most. Peter Thiel and his co-founders, including Alex Karp, saw an opportunity not just to connect past data, but to use it to predict future threats.

Founded in 2003, Palantir brought Silicon Valley’s technological firepower to the world of intelligence and national security. What made Palantir different from traditional government contractors was its approach to prediction. Instead of building rigid systems that simply stored information, Palantir created adaptive platforms that could ingest any data source, identify patterns humans couldn’t see, and surface connections that pointed toward future events.

An analyst could ask: “Show me everyone connected to this suspicious transaction who traveled to these locations in the past six months.” The software would reveal a network that might indicate a future attack.
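
To make the analyst’s question concrete, here is a minimal Python sketch of that kind of link analysis. It is not Palantir’s code or API. The toy records, the entity names, and the `connected_travelers` function are all hypothetical, and the sketch assumes "connected" means reachable within a couple of hops in a graph of known links.

```python
from collections import deque
from datetime import date, timedelta

# Hypothetical toy data: which entities are linked (shared accounts, calls, etc.).
LINKS = {
    "txn-001": {"alice", "bob"},
    "alice": {"txn-001", "carol"},
    "bob": {"txn-001"},
    "carol": {"alice", "dave"},
    "dave": {"carol"},
}

# Hypothetical travel records: (person, destination, travel date).
TRIPS = [
    ("alice", "Lisbon", date(2024, 5, 2)),
    ("carol", "Lisbon", date(2024, 3, 15)),
    ("carol", "Oslo", date(2023, 1, 10)),
    ("dave", "Lisbon", date(2024, 4, 1)),
    ("erin", "Lisbon", date(2024, 4, 20)),
]


def connected_travelers(links, trips, start, places, today,
                        max_hops=2, window_days=183):
    """Return people within max_hops of `start` who visited any of
    `places` in the last `window_days` days (roughly six months)."""
    # Breadth-first search records how many hops each entity is from start.
    hops = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if hops[node] == max_hops:
            continue  # do not expand beyond the hop limit
        for neighbor in links.get(node, ()):
            if neighbor not in hops:
                hops[neighbor] = hops[node] + 1
                queue.append(neighbor)

    # Keep only trips by connected entities, to the listed places, in the window.
    cutoff = today - timedelta(days=window_days)
    return sorted({
        person
        for person, place, when in trips
        if person in hops and place in places and cutoff <= when <= today
    })


result = connected_travelers(LINKS, TRIPS, "txn-001", {"Lisbon"}, date(2024, 6, 1))
print(result)
```

In this toy data the query surfaces two people. Erin traveled to the same place but has no link to the transaction, and Dave sits one hop too far away, which shows how much the answer depends on choices such as the hop limit and the time window.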

This wasn’t just data analysis. It was prediction, anticipation, and increasingly, preemption. Palantir promised to help its clients see the future before it arrived.

The Machine: How Palantir Predicts Tomorrow

Understanding how Palantir shapes futures requires looking inside its platforms. The company’s two core products, Gotham (for government) and Foundry (for commercial clients), function as predictive engines that turn historical patterns into future probabilities.

Imagine a military commander planning operations in a hostile region. Traditional intelligence might tell them where enemies were yesterday. Palantir’s software goes further: by analyzing movement patterns, supply chain data, communications metadata, and environmental factors, it predicts where enemies will be tomorrow. It models scenarios, simulates outcomes, and recommends actions based on what it forecasts will happen.

Or consider a corporation using Palantir’s Foundry platform. The software doesn’t just report last quarter’s supply chain performance. It identifies emerging bottlenecks before they cause delays, predicts equipment failures before they happen, and flags fraud patterns before significant losses occur. Companies can intervene in their own futures, preventing problems that haven’t yet materialized.

Palantir’s newest platform, AIP (Artificial Intelligence Platform), supercharges this predictive capability by deploying machine learning models at scale. AI doesn’t just find patterns; it learns which patterns reliably predict future events, then automates responses. The system becomes a closed loop: predict, act, learn from outcomes, predict better next time.
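
The predict, act, learn loop can be illustrated with a deliberately tiny Python sketch. This is an assumption-laden toy, not a description of how AIP works: the `FailureForecaster` class, the pattern names, and the 0.6 threshold are hypothetical, and the "model" is just a smoothed failure frequency per pattern.

```python
class FailureForecaster:
    """Hypothetical toy model: estimates how often a pattern precedes a failure."""

    def __init__(self, threshold=0.6):
        self.threshold = threshold
        self.counts = {}  # pattern -> (times seen, times followed by failure)

    def predict(self, pattern):
        # Laplace-smoothed failure rate; an unseen pattern starts at 0.5.
        seen, failures = self.counts.get(pattern, (0, 0))
        return (failures + 1) / (seen + 2)

    def act(self, pattern):
        # "Act" here only means raising a flag when the forecast is high enough.
        return self.predict(pattern) >= self.threshold

    def learn(self, pattern, failed):
        # Fold the observed outcome back into the counts.
        seen, failures = self.counts.get(pattern, (0, 0))
        self.counts[pattern] = (seen + 1, failures + int(failed))


# Hypothetical history of (observed pattern, whether a failure followed).
history = [
    ("overheating", True),
    ("overheating", True),
    ("normal", False),
    ("overheating", True),
    ("normal", False),
]

forecaster = FailureForecaster()
flags = []
for pattern, failed in history:
    flags.append(forecaster.act(pattern))  # predict and act
    forecaster.learn(pattern, failed)      # learn from the outcome

print(flags)
print(forecaster.predict("overheating"), forecaster.predict("normal"))
```

The forecaster starts out uncertain, then begins flagging "overheating" once it has seen that pattern precede a failure. The sketch leaves out the hard part of a real closed loop: once the system acts on a prediction, the intervention itself changes the outcome it later learns from.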

In law enforcement and immigration, the implications grow more controversial. Predictive policing systems powered by Palantir analyze crime patterns to forecast where offenses will occur, directing patrols to specific neighborhoods before crimes happen. Immigration and Customs Enforcement uses Palantir to predict which individuals might be undocumented, who might miss court dates, and where to allocate enforcement resources. These predictions determine who gets stopped, questioned, detained, or deported.

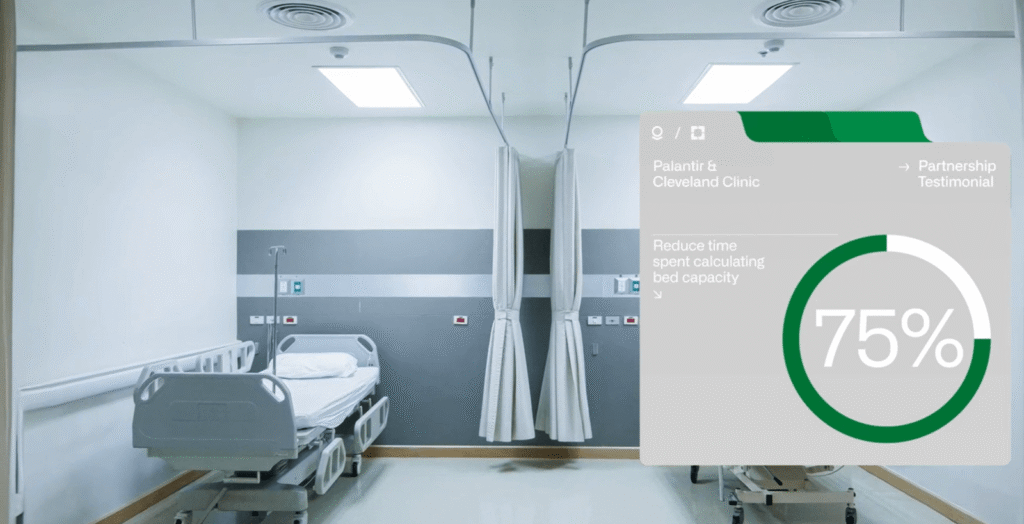

In healthcare, hospitals use Palantir to predict patient surges, disease outbreaks, and resource shortages before they become crises. During the pandemic, some health systems deployed Palantir to forecast ICU bed needs and ventilator requirements, estimating how much life-saving equipment would be needed before the patients who required it had arrived.

This is where the power becomes almost unsettling. Palantir’s software doesn’t passively observe reality. It actively shapes it by triggering interventions based on predictions.

The Controversy: Who Decides Your Future?

This predictive power raises profound ethical questions. When algorithms forecast futures and trigger preemptive actions, who’s accountable if the predictions are wrong? What happens when predictions become discriminatory or when they amplify existing biases?

Palantir’s rise has sparked fierce controversy. Civil rights advocates argue the software enables mass surveillance and erodes privacy protections. Its use in immigration enforcement has drawn protests over family separations, while policing applications raise concerns about algorithmic bias and over-policing of minority communities.

The ethical questions cut deep: Should private companies provide tools governments use to monitor citizens? Does security justify privacy erosion? Who’s accountable when powerful software is misused? These questions have real consequences for real people.

Palantir’s defense remains consistent: it provides the tools, but clients decide how to use them. The company says it builds safeguards into its software and leaves usage decisions to democratic institutions. Thiel argues that strong defense capabilities are essential for free societies, and that abandoning these fields to less scrupulous actors would be worse.

Critics find this position insufficient, arguing that building surveillance capabilities while disclaiming responsibility is ethically hollow. As Palantir’s technology grows more powerful and widespread, this unresolved debate becomes increasingly urgent.

Riding the AI Wave: Palantir’s Bet on the Future

Palantir’s evolution has two acts. The first was connecting scattered data to reveal insights. The second, now under way, is harnessing artificial intelligence to detect patterns humans cannot see and to automate decisions at machine speed. As AI reshapes industries, Palantir has positioned itself at the center of that transformation.

Financial results show rapid growth. Revenue has surged on expanding government contracts and commercial success. U.S. commercial revenue in particular has grown quickly, suggesting Palantir has moved beyond its government-contractor roots. Wall Street has responded enthusiastically, making the company a favorite among AI and defense investors.

Yet questions persist. Analysts debate whether Palantir’s high valuation is justified given its dependence on government contracts and its ethical controversies. Competition from enterprise giants and AI startups is intensifying. As AI capabilities become commoditized, Palantir must prove that what it offers cannot be easily replicated.

What is clear is that Palantir has positioned itself as core infrastructure for organizations deploying AI across manufacturing, finance, healthcare, and energy.

Who Controls Tomorrow?

Palantir’s story ultimately forces an uncomfortable reckoning: What happens to human agency when futures are pre-written by algorithms? When software predicts crime, allocates resources, determines risks, and recommends actions before events unfold, do we still control our destinies? Or have we quietly outsourced tomorrow to machines and the institutions commanding them?

Your future is being calculated right now in data centers you’ll never access, by software you’ll never see. Palantir has mastered seeing around corners. The rest of us must decide whether we want to live in a world where someone else has already determined what’s coming.